1. Hayek and the Perceptron Controversy: F. A. Hayek's Forgotten Contribution to Neural Networks*

1.1 In 1988, two independent citations of F. A. Hayek appeared in computer science research: one from Cowan and Sharp in their recapitulation of the current state of research on neural networks, the other from Miller and Drexler in their comparison of computational ecologies with markets. These citations occurred after a long hiatus. Hayek had been recognized as a valuable input into research on elementary neural networks, notably Frank Rosenblatt's perceptron, on several occasions between 1958 and 1961.

1.2 In his discussion published as "The Sensory Order after 25 Years", Hayek notes that aside from some "sensible and sympathetic reviews . . . I have since had practically no indication of any response to my thesis by the psychological profession" (1982, 389). While one can, with effort, find scattered citations of The Sensory Order in discussions of neurology, recognition of The Sensory Order in computer science fared no better. After 1961, aside from a few dissertations and one British researcher, F. H. George, authors in artificial intelligence stopped acknowledging Hayek's relevance. Amongst leading researchers at the center of the field, recognition ceased entirely.

1.3 This leaves two questions that deserve consideration. The first question is fairly straightforward: why was Hayek recognized by researchers in artificial intelligence in the first place? The second question, why Hayek's contribution was subsequently disregarded by those researchers, is less obvious and, to my knowledge, has not been adequately developed. It is this question with which I concern myself. In answering it, I correct a misconception concerning Hayek's role in the development of neural networks: namely, that Hayek's work on neural networks went unnoticed because he lacked the technical capacity to express his ideas (Axtell 2016; Mirowski 2002). These explanations have not been substantiated; they are reasonable assertions that fit with the authors' prior assumptions concerning Hayek and his relation to the field. Hayek's aloofness from advances in computing and artificial intelligence, in a personal sense, did not benefit Hayek. By the time his work was picked up by neural network researchers, Hayek had returned to thinking about integrating the complexity that undergirds The Sensory Order into methodology supporting analysis of economy, law, and society in general (Caldwell 2014; see for example Hayek 1960; 1967).

1.4 I present evidence for a more nuanced story with regard to the lack of recognition received by Hayek. I begin with the more recent rediscovery of Hayek to develop the context of the mystery at hand. I follow by outlining Hayek's participation in and recognition among early research in artificial intelligence. I then identify the culprit as partly a mixture of social and institutional context. Hayek found himself 1) on the bad side of a dispute concerning the significance of Gödel's undecidability proof and 2) in the midst of the connectionist paradigm that would be deemed, by leaders of AI research who preferred symbolic AI, a dead end or, at least, unfruitful in light of computing and funding constraints. The result was more than two decades in which Hayek's work failed to receive further recognition amongst researchers in artificial intelligence. Hayek's place in this dispute is a challenge to Mirowski's conceptualization of Hayek as participating in the mechanization of the human agent with the cyberneticists. I close with a brief description of how the revival of Hayekian insights in computer science and artificial intelligence informs our understanding of more than two decades of silence. This is also where the story opens.

2. The Rediscovery of Hayek

2.1 In September of 1989, Don Lavoie, with the support of graduate students, launched the "Agorics" project. Of course, Lavoie was familiar with Hayek as he had earned his Ph.D. at New York University studying under Israel Kirzner (Boettke 2023). Earlier in the decade he had written a respectable critique of Marxian economics in his work on the socialist calculation debate. Before he studied economics at NYU, Don Lavoie had studied computer science and was employed as a programmer. By a wonderful coincidence, Hayek had been rediscovered by computer scientists Mark S. Miller and K. Eric Drexler. This was brought to Lavoie's attention after a recommendation of their work by Lavoie's graduate student, Bill Tulloh. In articles published in The Ecology of Computation, Miller and Drexler had, amongst other aspects of his paradigm, recognized the relevance of the Hayekian perspective for informing program architecture (Miller and Drexler 1988a; 1988b).

A central challenge of computer science is the coordination of complex systems. In the early days of computation, central planning - at first, by individual programmers - was inevitable. As the field has developed, new techniques have supported greater decentralization and better use of divided knowledge across a program's operations. Chief among these techniques has been object-oriented programming, which in effect give property rights in data to computational entities. Further advance in this direction seems possible. (Miller and Drexler 1988b, 163).

2.2 In a letter written to the authors the following year, Lavoie described his interaction with the work of Miller and Drexler as being like "a religious experience." Lavoie proposed that "[a]goric systems development could presumably benefit from the discovery process that would take place if we could open up some intellectual exchange between our centers." The authors would respond. After meeting with the authors and Phil Salin, the latter of whom was apparently responsible for introducing Miller and Drexler to F. A. Hayek, Lavoie, Baetjer, and Tulloh (1990) would refer to them as "coauthors with us . . . since the whole is a summary of our discussions with them."[1].

2.3 Amidst the long list of citations provided in the "High Tech Hayekians" article, one stands out as particularly significant. Jack Cowan and David Sharp cite Hayek in the article, "Neural Nets and Artificial Intelligence". The article was originally published in Daedalus in 1988 and was republished in 1989 in the reprint of the Daedalus issue, "The Artificial Intelligence Debate: False Starts and Real Foundations". It was also published with slight variations in the Quarterly Reviews of Biophysics.

2.4 This citation is fascinating for several reasons. First, Jack Cowan was part of the community involved in neural networks around the time that Hayek's The Sensory Order had been cited, along with Donald Hebb's The Organization of Behavior, by Frank Rosenblatt (1958, 1961) in his work on the Perceptron. This was a landmark development in neural networks that is broadly recognized as significant (e.g., see Russell and Norvig 1995, 20-22, 761; Crevier 1993, 102-7; McCorduck 2004, 104-107; Mitchell 2019, 24-32). Further, Hayek was cited by Alice M. Pierce in 1959 in the annotated bibliography, "A Concise Bibliography of the Literature on Artificial Intelligence". Pierce notes appreciation for "Professor Minsky for selecting items to be included in this publication" and to Allen Newell who "kindly provided a copy of his bibliography for a course on Information Processing given in the Spring of 1959". So at the time, either Minsky or Newell, or perhaps both, were aware of The Sensory Order, likely owing to its citation in Rosenblatt (1958). In 1961, Minsky published "A Selected Descriptor-Indexed Bibliography to the Literature on Artificial Intelligence", which, likewise, included Hayek's The Sensory Order (Minsky 1961a). The manuscript had been received for publication in 1960. Minsky casts a broad net, including nearly 600 references. In presenting the bibliography Minsky notes that:

This bibliography is intended . . . to present some information about this literature. The selection was confined mainly to publications directly concerned with construction of artificial problem-solving systems. Many peripheral areas are omitted completely or represented only by a few citations. The classification system is not particularly accurate. The descriptive categories that we have selected do not always permit very sharp distinctions and not always useful ones. There are surely many errors in the assignments of papers to the categories, both for those I have not read, and for those which I did not fully understand. (Minsky 1961a, 39)

2.5 Minsky recognized F. A. Hayek as a potential contributor to the development of neural networks. Why, then, is Hayek's The Sensory Order not widely recognized amongst researchers in computer science and, in particular, artificial intelligence?

3. F. A. Hayek Cast Out by Marvin Minsky

3.1 Had this been Minsky's last reference to Hayek, it could be viewed as not particularly meaningful. But in 1961 Minsky also published a follow-up, "Steps Toward Artificial Intelligence", in which he cast a verdict on Hayek's contribution: he rejected it. At its core, Minsky's decision to discard Hayek, thereby diminishing Hayek's influence, was a response to Hayek's use of Gödel's undecidability proof as support for accepting limits on the ability of machines and intelligent agents to develop self-understanding (Hauwe 2011).

3.2 I outline Minsky's references to Hayek in full since they are a fundamental part of my thesis. Hayek appears twice. The first appearance is in the footnote at the end of the following paragraph:

But we should not let our inability to discern a locus of intelligence lead us to conclude that programmed computers therefore cannot think. For it may be so with man, as with machine, that, when we understand finally the structure and program, the feeling of mystery (and self-approbation) will weaken. We find similar views concerning "creativity" in [60]. The view expressed by Rosenbloom [73] that minds (or brains) can transcend machines is based, apparently, on an erroneous interpretation of the meaning of the 'unsolvability theorems' of Gödel.

3.3 Although Hayek is not mentioned, those familiar with Hayek's proof will be aware of the conflict with Hayek's view, since this runs directly against Hayek's assertion that a mind cannot fully understand itself. Footnote 38, which appears at the end of the last sentence, reflects Minsky's concern about certain cognitive interpretations of Gödel's Undecidability Proof:

On problems of volition we are in general agreement with McCulloch [75] that our freedom of will "presumably means no more than that we can distinguish between what we intend [i.e., our plan], and some intervention in our action." See also MacKay ([76] and its references); we are, however unconvinced by his eulogization of "analogue" devices. Concerning the "mind-brain" problem, one should consider the arguments of Craik [77], Hayek [78] and Pask [79]. Among the active leaders in modern heuristic programming, perhaps only Samuel [91] has taken a strong position against the idea of machines thinking. His argument, based on the fact that reliable computers do only that which they are instructed to do, has a basic flaw; it does not follow that the programmer therefore has full knowledge (and therefore full responsibility and credit for) what will ensue. For certainly the programmer may set up an evolutionary system whose limitations are for him unclear and possibly incomprehensible. No better does the mathematician know all the consequences of a proposed set of axioms. Surely a machine has to be in order to perform. But we cannot assign all the credit to its programmer if the operation of a system comes to reveal structures not recognizable or anticipated by the programmer. While we have not yet seen much in the way of intelligent activity in machines, Samuel's arguments in [91] (circular in that they are based on the presumption that machines do not have minds) do not assure us against this. Turing [72] gives a very knowledgeable discussion of such matters. (Minsky 1961b, 27n38)

3.4 As with the bibliography published by Minsky, this footnote on its own seems not to critique Hayek, whose name is even included after Craik, an author whose psychology and methodology influenced both Hayek and Minsky (see, for example, Minsky 1965; 1968). However, a careful reading shows that only Samuel, who published work using artificial intelligence to play checkers, is recognized as an "active leader in modern heuristic programming". A reasonable interpretation, then, is that Hayek was amongst the non-leaders. This quiet critique, which is fair given the descriptor "active", prepares the reader for further commentary on Hayek. At the time, Hayek was concerned with applying the ideas about the nervous system and perception developed in The Sensory Order to society more broadly.

3.5 On the very next page, Minsky again cites Hayek, this time with regard to "Models of Oneself". Again, I provide the paragraph preceding the reference:

To the extent that the creature's actions affect the environment, this internal model of the world will need to include some representation of the creature itself. If one asks the creature "why did you decide to do such and such" (or if it asks this of itself), any answer must come from the internal model. Thus the evidence of introspection itself is liable to be based ultimately on the processes used in constructing one's image of one's self. Speculation on the form of such a model leads to the amusing prediction that intelligent machines may be reluctant to believe that they are just machines. The argument is this: our own self-models have a substantially "dual" character; there is a part concerned with the physical or mechanical environment - with the behavior of inanimate objects - and there is a part concerned with social and psychological matters. It is precisely because we have not yet developed a satisfactory mechanical theory of mental activity that we have to keep these areas apart. We could not give up this division even if we wished to - until we find a unified model to replace it [emphasis mine]. Now, when we ask such a creature what sort of being it is, it cannot simply answer "directly:" it must inspect its model(s). And it must answer by saying that it seems to be a dual thing - which appears to have parts - a "mind" and a "body." Thus even the robot, unless equipped with a satisfactory theory of artificial intelligence, would have to maintain a dualistic opinion on this matter. (Minsky 1961b, 28)

3.6 The key point of this paragraph could be easily missed, so I have added emphasis showing that Minsky appears to anticipate that, at some point, there will be developed a "unified model" that can replace the dualistic model of cognition. Exactly such a dualist model is presented by Hayek who claims that "we shall permanently have to be content with a dualistic view of the world (8.47)." After setting the stage in the previous pages, Minsky finally clarifies his evaluation of Hayek's position:

There is a certain problem of infinite regression in the notion of a machine having a good model of itself: of course, the nested models must lose detail and finally vanish. But the argument, e.g., of Hayek (see 8.69 and 8.79 of [78]) that we cannot "fully comprehend the unitary order" (of our own minds) ignores the power of recursive description as well as Turing's demonstration that (with sufficient external writing space) a "general-purpose" machine can answer any question about a description of itself that any larger machine could answer. [2].

3.7 Minsky references paragraphs 8.69 and 8.79 from Hayek (1952), which are as follows:

8.69 The proposition which we shall attempt to establish is that any apparatus of classification must possess a structure of a higher degree of complexity than is possessed by the objects which it classifies; and that, therefore, the capacity of any explaining agent must be limited to objects with a structure possessing a degree of complexity lower than its own. If this is correct, it means that no explaining agent can ever explain objects of its own kind, or of its own degree of complexity, and therefore, that the human brain can never explain its own operations. This statement possesses, probably, a high degree of prima facie plausibility. It is however, of such importance and far-reaching consequences, that we must attempt a stricter proof.

8.79 An analogous relationship, which makes it impossible to work out on any calculating machine the (finite) number of distinct operations which can be performed with it, exists between that number and the highest result which the machine can show. If that limit were, e.g., 999,999,999, there will already be 500,000,000 additions of two different figures giving 999,999,999 as a result, 499,999,999 pairs of different figures the additions of which give 999,999,998 as a result, etc. etc., and therefore a very much larger number of different additions of pairs of figures only than the machine can show. To this would have to be added all the other operations which the machine can perform. The number of distinct calculations it can perform therefore will be clearly of a higher order of magnitude than the highest figure it can enumerate.
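Hayek's arithmetic in 8.79 can be checked directly. The sketch below is only an illustration under stated assumptions: "figures" are taken to be non-negative integers including zero, an "addition of two different figures" is an unordered pair a < b, and the function names are hypothetical rather than anything found in Hayek or Minsky. Under those assumptions the number of pairs summing to N is floor((N + 1) / 2), and the number of pairs whose sum does not exceed the limit L is floor((L + 1)^2 / 4).

```python
def count_pairs_with_sum(total: int) -> int:
    """Unordered pairs a < b of non-negative integers with a + b == total."""
    return (total + 1) // 2


def count_additions_up_to(limit: int) -> int:
    """Unordered pairs a < b with a + b <= limit (closed form of the sum above)."""
    return (limit + 1) ** 2 // 4


def brute_force_count(limit: int) -> int:
    """Direct enumeration, usable only for small limits, to check the closed form."""
    return sum(
        1
        for a in range(limit + 1)
        for b in range(a + 1, limit + 1)
        if a + b <= limit
    )


LIMIT = 999_999_999  # the highest result the machine in Hayek's example can show

# Hayek's two stated counts.
print(count_pairs_with_sum(LIMIT))      # 500000000
print(count_pairs_with_sum(LIMIT - 1))  # 499999999

# The closed form agrees with enumeration on small cases.
assert all(count_additions_up_to(n) == brute_force_count(n) for n in range(40))

# Total number of distinct additions versus the largest displayable figure.
print(count_additions_up_to(LIMIT))     # 250000000000000000
```

On these assumptions the machine admits 2.5 x 10^17 distinct additions of pairs alone, against a largest displayable figure below 10^9, which is the gap in order of magnitude that Hayek describes.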

3.8 One can imagine that the statement that follows immediately after was even more offensive to Minsky:

8.80. Applying the same general principle to the human brain as an apparatus of classification it would appear to mean that even though we may understand its modus operandi in general terms, or, in other words, possess an explanation of the principle on which it operates, we shall never, by means of the same brain, be able to arrive at a detailed explanation of its working in particular circumstance, or be able to predict what the results of its operation will be. To achieve this would require a brain of a higher order of complexity, though it might be built on the same general principle. Such a brain might be able to explain what happens in our brain, but it would in turn still be unable to fully explain its own operations, and so on.

3.9 Hayek, an outsider to both the study of neurology and computer science, here takes it upon himself to establish the bounds of the domain of concern. Again, recall that Hayek was "not an active leader" (Minsky 1961b). As we see from the nature of Minsky's work cited so far and will continue to see, this was the role taken up by Minsky. In this capacity, Minsky helped organize the Dartmouth conference, which played a role in developing community amongst the earliest researchers in Artificial Intelligence, including Herbert Simon, Allen Newell, John McCarthy, Claude Shannon, Ross Ashby, Warren McCulloch, and Arthur Samuel. As Melanie Mitchell, a student of Hofstadter, explains, Dartmouth served as a landmark event. "[T]he big four 'founders' of AI, all strong devotees of the symbolic [AI] camp, had created influential - and well-funded - AI laboratories: Marvin Minsky at MIT, John McCarthy at Stanford, and Herbert Simon and Allen Newell at Carnegie Mellon" (2019, 31). Minsky was an "active leader" and sought to influence the direction of the field. The content of Minsky's critique of Hayek was part of a broader program to steer research in artificial intelligence. Critique of the content of Hayek's discussion of Gödel would show up across the next decade of Minsky's work. However, he never mentions Hayek by name after this attack on Hayek's "Philosophical Consequences".

4. Between Consistency and Decidability

4.1 What follows is a series of references from Minsky to content related to The Sensory Order in which Hayek is never cited. Typically, Minsky instead cites Donald Hebb (1949). Perhaps ironically, Minsky writes in 1962, just one year after the publication of his attack on Hayek:

It seems likely that stimuli which occur together frequently become associated in some way with special components of the internal state. There is a clear need for a theory of this kind of formation. What are reasonable conditions for such a grouping to deserve recognition as a pattern with a special "recognizer" or, perhaps, realization of a "cell-assembly" in a neural structure? (Hebb, 1949) There seems to have been very little analysis of "association" types of learning.

4.2 Anyone familiar with The Sensory Order will be left with at least a modicum of surprise at this statement. Hayek spends a significant amount of energy describing how various neural responses are shared. In fact, describing learning through association was what motivated Hayek's initial research on the topic three decades before writing The Sensory Order! In The Sensory Order, which is the target of Minsky's critique, Hayek writes:

2.49 The point on which the theory of the determination of mental qualities which will be more fully developed in the next chapter differs from the position taken by practically all current psychological theories is thus the contention that the sensory (or other mental) qualities are not in some manner originally attached to, or an original attribute of, the individual physiological impulses but that the whole of these qualities is determined by the system of connexions by which the impulses can be transmitted from neuron to neuron; that it is thus the position of the individual impulse or group of impulses in the whole system of such connexions which gives it its distinctive quality; that this system of connexions is acquired in the course of development of the species and the individual by a kind of 'experience' or 'learning'; and that it reproduces therefore at every stage of its development certain relationships existing in the physical environment between the stimuli evoking the impulses.

4.3 This description has much in common with Hebb's "cell assembly". In introducing his idea of "cell assembly", Hebb writes, "It is proposed first that a repeated stimulation of specific receptors will lead slowly to the formation of an 'assembly' of association-area cells which can act briefly as a closed system after stimulation has ceased; . . . The assumption, in brief, is that a growth process accompanying synaptic activity makes the synapse more readily traversed" (1949, 60). Barry Smith states the process with clarity:

Some patterns of stimuli will tend to occur frequently together. This, Hayek hypothesises, will tend in turn to bring fibres and it will tend to lead also to the formation of especially dense connections to corresponding central neurons. (Smith 1997)
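The shared idea, that stimuli which frequently occur together come to be more strongly connected, can be illustrated with a toy calculation. The sketch below is an assumption-laden illustration and not a formalism found in Hebb (1949) or Hayek (1952): it uses the textbook "Hebbian" update in which the connection between two units grows by a fixed amount each time both are active, and the function name, learning rate, and stimulus patterns are all hypothetical.

```python
def hebbian_weights(patterns, learning_rate=0.1):
    """Accumulate pairwise connection strengths from binary activity patterns.

    Each time units i and j are active in the same pattern, the connection
    between them is strengthened by `learning_rate` (an assumed constant).
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += learning_rate * pattern[i] * pattern[j]
    return weights


# Hypothetical experience: stimuli 0 and 1 usually arrive together,
# stimulus 2 mostly arrives alone, and 0 and 2 coincide once.
patterns = [[1, 1, 0]] * 8 + [[0, 0, 1]] * 2 + [[1, 0, 1]]
weights = hebbian_weights(patterns)

# The frequently co-occurring pair ends up with the strongest connection.
print(weights[0][1] > weights[0][2] > weights[1][2])  # True
```

Nothing in the sketch depends on what the individual units "mean"; the resulting structure reflects only the relative frequency with which stimuli coincide, which is the feature both descriptions emphasize.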

4.4 And many others have continued to recognize the link between Hayek and Hebb, likely through the encouragement of the Rosenblatt citation, with regard to computation in neural networks as well as a description of the process of classification provided by the nervous system (Simmons 1963; Nagy 1962; 1990; Edelman 1982; Marsh 2010, 122).

4.5 In a similar vein as his critique in the 1961 paper, in "Matter, Mind and Models", published in 1965, Marvin Minsky elaborates his concerns about methodological dualism with regard to the mind-body problem with greater clarity. First, he attempts to present the possibility of a man (or agent) modeling oneself:

When a man is asked a general question about his own nature, he tries to give a general description of his model of himself. That is, the question will be answered by $M^{**}$ [i.e., one's model of the model of oneself]. To the extent that (1) $M^*$ [i.e., one's model of himself] is divided as we have supposed [i.e., in the sense of the mind-body split] and (2) that the man has discovered this - that is, this fact is represented in $M^{**}$ - his reply will show this.

His statement [his belief] that he has a mind as well as a body is the conventional way to express the roughly bipartite appearance of his model of himself.

Because the separation of the two parts of $M^*$ is so indistinct, and their interconnections are so complicated and difficult to describe, the man's further attempts to elaborate on the nature of this mind-body distinction are bound to be confused and unsatisfactory. . . . From a scientific point of view, it is desirable to obtain a unitary model of the world comprising both mechanical and psychological phenomena. Such a theory would become available, for example, if the workers in Artificial Intelligence, Cybernetics and Neurophysiology all reach their goals. (Minsky 1965, 2-3)

4.6 Here, Minsky envisions that an aim of the general movement of computation and cybernetics is to provide "a unitary model" that joins together the "mechanical and psychological". At this point, Minsky articulates the problem but fails to provide a solution. As he notes at the start of his paper, "[t]he paper does not go very far toward finding technical solutions to the questions, but there is probably some value in finding at least a clear explanation of why we are confused (1)." His development of the argument about agents developing recursive models that aid self-understanding never really proceeds to the heart of Hayek's application of Gödel's Undecidability Proof to the mind as a computational device. After a bit of meandering, Minsky appears to critique the view, expressed by Hayek, that the pattern of classification expressed by the neurons and families of neurons should not be directly applied to describe category analysis that is part of human cognition (i.e., Hayek's "practical dualism").

In everyday practical thought physical analogy of metaphors play a large role presumably because one gets a large payoff for a model of apparently small complexity. (Actually, the incremental complexity is small because the model is already part of the physical part of $W^*$ [i.e., the model of the world]). It would be hard to give up such metaphors, even though they probably interfere with our further development, just because of this apparent high value-to-cost ratio. We cannot expect to get much more by extending the mechanical analogies, because they are so informational in character. Mental processes resemble more the kinds of processes found in computer programs - arbitrary symbol-associations, tree-like storage schemes, conditional transfers and the like. In short, we can expect the simpler useful mechanical analogies will survive, but it seems doubtful that they can grow to bring us usable ideas for the parallel unification of $W^*$. (Minsky 1965, 3)

4.7 From the mid-1960s, Minsky increasingly focused on symbolic AI (for example, see Minsky 1968; Minsky 1991). In his article, "Artificial Intelligence", he focuses entirely on rule-based reasoning as a means to problem solving and plan formation (1966). Around this time Minsky seems to have given up on developing the unified model of consciousness, though he did not give up the belief that a unified model best described reality and, therefore, that "practical dualism" is not a terminal state for human understanding. One may notice that Minsky's movement from neural networks to symbolic AI resembles Hayek's "practical dualism", though without the embrace of the strong epistemological assertion concerning the limits of self-knowledge. In the article, the only reference to neural networks is Minsky's inclusion of McCulloch and Pitts (1943) as being part of a path-breaking trio of publications, the others being from Craik and from Wiener, Rosenblueth, and Bigelow. His preference for symbolic analysis is reflected in his discussion of planning, which neural nets, on their own, lack:

Finally, we should note that in a creature with high intelligence one can expect to find a well-developed special model concerned with the creature's own problem-solving activity. In my view the key to any really advanced problem-solving technique must exploit some mechanism for planning - for breaking the problem into parts and allocating shrewdly the machine's effort and resources for the work ahead. This means the machine must have facilities for representing and analyzing its own goals and resources. One could hardly expect to find a useful way to merge this structure with that used for analyzing uncomplicated structures in the outer world - nor could one expect that anything much simpler would be of much power in analyzing the behavior of other creatures of the same character. (Minsky 1965, 3)

4.8 This concern with machines capable of planning also returns us to Minsky's concern about Hayek's interpretation of Gödel. Hayek's statement that humans are not capable of self-understanding carries the implication that an individual machine, also, is not capable of a complete understanding of itself. This, of course, is more clearly represented by Turing's halting problem, which is another iteration of the discrepancy between completeness and consistency that challenged the Hilbert program. That is, not all statements generated from a set of axioms that support a sufficiently complex system of logic can be proven. Some statements (and some computer programs) must remain undecidable:

The notion of a machine containing a model of itself is also complicated, and one might suspect potential logical paradoxes. There is no logical problem about the basic idea, for the internal model could be very much simplified, and its internal model could be vacuous. But, in fact, there is no paradox even in a machine's having a model of itself complete in all detail! For example, it is possible to construct a Turing machine that can print out an entire description of itself, and also execute an arbitrarily complicated computation, so that the machine is not expending all its structure on its description. In particular, the machine can contain an interpretive program which can use the internal description to calculate what the machine would do under some hypothetical circumstance. Similarly, while it is impossible for a machine or mind to analyze, from moment to moment precisely what it is doing at each step (for it would never get past the first step) there seems to be no logical limitation to the possibility of a machine understanding its own basic principles of operation or, given enough memory, examining all the details of its operation in some previously recorded state.

With interpretative operation ability, a program can use itself as its own model, and this can be repeated recursively to as many levels as desired, until the memory records of the state of process get out of hand. With the possibility of this sort of introspection, the boundaries between parts, things and models become very hard to understand. (63-64)
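The self-describing machine in this passage has a familiar counterpart in ordinary programming: a program whose output is its own source text (often called a quine). The sketch below is a minimal Python illustration, not Minsky's Turing-machine construction; the names `TEMPLATE`, `PROGRAM`, and `run_and_capture` are hypothetical. The generated program prints a complete copy of itself and then carries out a further, unrelated computation, so it is "not expending all its structure on its description".

```python
import contextlib
import io

# A template that, when filled with its own representation, yields a
# three-line program: define the template, print the program text,
# then perform some additional computation.
TEMPLATE = 'TEMPLATE = %r\nprint(TEMPLATE %% TEMPLATE)\nprint(sum(range(10)))'
PROGRAM = TEMPLATE % TEMPLATE


def run_and_capture(source: str) -> str:
    """Execute `source` in a fresh namespace and return what it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


output = run_and_capture(PROGRAM)

# The first thing the program prints is exactly its own source text;
# the rest of the output is the extra computation (0 + 1 + ... + 9).
print(output == PROGRAM + "\n45\n")  # True
```

The sketch bears only on static self-description. It does not show a program predicting, from moment to moment, what it will itself do, which is the stronger capability at issue between Minsky and Hayek.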

4.9 Minsky seems to be talking in circles. A computer can understand itself with simplified models. But, apparently, it could also print a completed description of itself. But this complete description may be encumbered by memory limitations. On and on. Of course, machines can perform self-reflection to some extent. For example, a machine may monitor particular vital signs that it needs to self-regulate in order to maintain internal temperature. A machine can list details of components in its memory. However, as Minsky recognizes, complete description of the system from moment to moment is not a capability of a machine. There will always be some blindness from which the machine suffers.

4.10 Suppose, for example, that a machine did attempt to print the entirety of the contents saved on its short- and long-term storage components. The act of printing itself changes the internal state of the machine. The strong claim that Minsky would like to resolve is terribly strict. I do not wish to overstate the case. The mainstream view is that computability problems only cover a small portion of the space of problems of concern to computational theorists, a point with which Minsky's general research program concurs. Of course, there also exists significant opposition to this (Axtell 2000, 17; Chaitin et al 2018; Deveraux, et al., 2024; Doria 2017; Rosser Jr 2012). A notable advocate of Hayek's specific position concerning self-understanding is Douglas Hofstadter, whose book, Gödel, Escher, Bach, motivated a generation of programmers in artificial intelligence (Hofstadter 1980; see also Hauwe 2011, 396). Still, computers can perform many tasks that might qualify as self-reflection. But all of these tasks are limited. They do not reflect a complete understanding of the machine by the machine itself.

4.11 Minsky dedicates his book "Computation: Finite and Infinite Machines" to an elaboration of the possibilities of computation and the delineation of the limits of Gödel's Undecidability and Turing's Halting Problem. Minsky outlines the operations of machines along the lines (i.e., with some variation) presented in Alan Turing's (1936) description of the Halting Problem. In chapter 4, Minsky shows that neural nets are, themselves, finite state machines. And he clarifies, in a subsection titled "Epistemological Consequences", that his theory of machines extends to a theory of the brain in light of McCulloch and Pitts (1943):

In their classic paper, McCulloch and Pitts [1943] make some observations about the consequences, for the theory of knowledge, of the proposition that the brain is composed of basically finite-state logical elements. And even though this may not be precisely the case, the general conclusions remain valid also for any finite-state or probabilistic machine, presumably including a brain (Minsky 1967, 96).

4.12 Minsky aims to show the capabilities of such machines in light of the limitations identified by Gödel (1931), Church (1936), and Turing (1936), an aim in which he succeeds, though he sometimes wavers with regard to the strictness of presentation. In responding to objections that minds, since they are "superior to all presently known mechanical processes must, by their intuitive nature, escape any systematic description", Minsky simply responds that "Turing discusses some of these issues in his brilliant article, 'Computing Machines and Intelligence' [1950], and I will not recapitulate his arguments. They amount, in my view, to a satisfactory refutation of many such objections (Minsky 1967, 107)." Minsky presents Turing's usage of the term "state of mind" to refer to the state of a machine, in the presentation of a worldview that asserts what it is trying to prove.

4.13 Minsky was moving on from this problem as it was resolved to his satisfaction, though the resolution was not by any means proven. Minsky viewed the human mind as a machine whose determinism must not be undermined by a gap in understanding that cannot directly translate mechanisms of the nervous system to conscious reasoning. Commentary about this is mixed in with proofs and technical analysis. For example, in passing as he discusses the Halting Problem, he weakens Turing's criterion, noting that "there is nothing absurd about the notion of a man contemplating a description of his own brain (148)." The object of concern here is a different, if related, object than that of the Halting Problem. Given the lack of theoretical depth of Minsky's objection, his disagreement with Hayek appears to rest largely on semantics and prior assumptions, which is to say, statements of faith.

4.14 Not only did Minsky denounce Hayek, but his coauthor, Papert, would in some sense sponsor another attack on Hayek: this time by James G. Taylor (1962) in his description of the sensory order, The Behavioral Basis of Perception. Papert had been a coauthor of Taylor (Taylor and Papert 1956) in a description of human learning where visual perception is distorted.

4.15 Taylor dedicated the entire last chapter of his book to an attack on F. A. Hayek's "Philosophical Consequences" (chapter 8 in The Sensory Order). Taylor even named his own chapter "Philosophical Consequences". The chapter is itself not particularly impressive, containing winding narratives from Taylor that do more to present his own views of the mechanism underlying human perception than to provide a careful rebuttal of Hayek's philosophical position. (Perhaps it is due to the argument's quality that, as far as I am aware, Minsky never cited Taylor's book.)

4.16 Taylor argued that Hayek's focus on the individual brain removed the human agent from the context of society that serves as judge of the validity of ideas, thereby making Hayek's critical view of self-understanding inconsequential. Taylor fails to confront Hayek's argument on philosophical grounds, consistent with his confession that "I have neither erudition nor skill in the field of philosophy" (Taylor 1962, 338). Taylor goes as far as to accuse Hayek of treating commonplace mental models differently than scientific mental models, a point to which Hayek (1943) clearly objects in "The Facts of the Social Sciences". Taylor spends only a small portion of the chapter confronting Hayek's argument about methodological dualism, saying that "essence of consciousness is simultaneity of knowing", thus defining the problem of the emergence of consciousness out of existence (Taylor 1962, 361). Although Taylor's argument is not impressive, the proximity of Papert to Taylor is consistent with a pattern in which Minsky's argument against Hayek was of concern to those around him.

4.17 There is a bit of irony in Taylor failing to account for the breadth of Hayek's views, presumably because he had simply failed to read his other works. Taylor had actually contacted Hayek in January of 1953 with a handwritten letter four pages in length.[3] There he writes about The Sensory Order in light of his own manuscript:

The important, and encouraging part is that two people, working quite independently of each other, and against very different backgrounds, should have arrived at theories between which there is such a substantial measure of agreement. (Taylor, January 4, 1953, 2-3)

4.18 There, he also indicates his plan for a chapter on "philosophical implications of my theory, just as you have done". In September of the same year, Taylor even offered "to try to persuade the organisers [of the International Congress of Psychology] to arrange for a symposium on 'The foundations of theoretical psychology,' with Hebb, you and me as the symposiasts." He continues in his letter, "[t]he time is ripe for a determined attack on the lazy eclecticism which permits so many psychologists to 'explain' learning in terms of behaviourist theory, perception in terms of Gestalt theory, dreams and neuroses in terms of psychoanylitic theory, and so on (Taylor, September 16th, 1953)."

4.19 Hayek was not the best correspondent. He answered Taylor in March, indicating that he hoped to read the book in the summer as he had spent his time in the previous months "trying to elaborate the argument of my book into the foundations of a theory of communication" (Hayek, March 23, 1953). When Taylor contacted Hayek again in September to inquire if he would be interested in a panel with Hebb and Taylor, Hayek indicated interest and also advised that he still had not read Taylor's book (Hayek, October 15, 1953). Hayek again inquired in January 1954 and appears to have received no further response concerning the conference (Hayek, January 2, 1954). I have located no such panel in any scholarly article or conference report. Whether or not it was his intention, Hayek seems to have communicated a lack of interest. Taylor seems to have responded with a lack of enthusiasm for the correspondence.[4]

Hayek and Finite State Machines

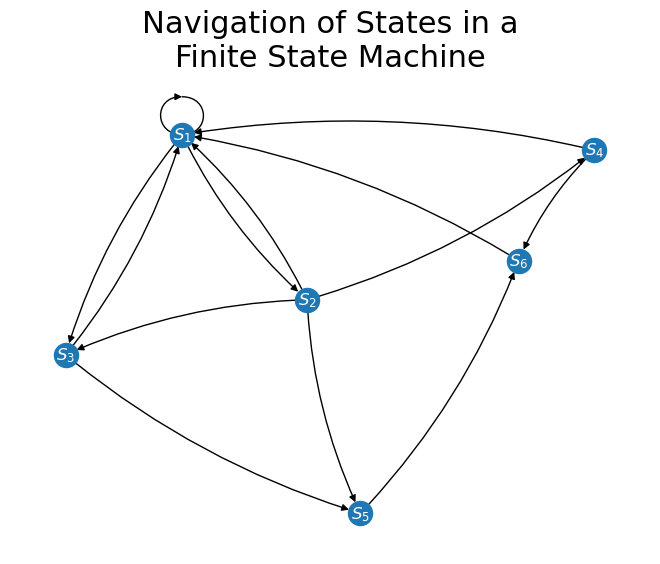

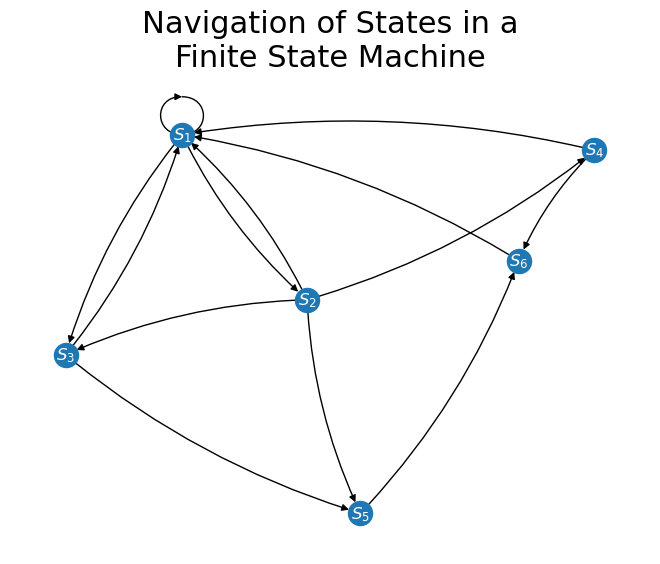

4.20 Although Hayek had moved on from neural networks, it is noteworthy that as early as 1952, Hayek was writing about systems that bear great similarity to finite state machines. Recall that Minsky's (1967) book was titled Computation: Finite and Infinite Machines. Minsky was referring to finite state machines and envisioned justification for formulating infinite machines. For discussion here, we will focus on the finite state machine.

4.21 A finite state machine is an abstraction of a node that interacts with other inputs in the environment, including inputs from human beings. The finite state machine updates its current state based on a limited set of responses available from the state of the finite machine. A similar conceptualization is the Markov chain, which indicates the probability that a node in a given state transitions to any other available state or remains the same. A finite state machine will typically adjust the state of the system based on a set of rules, which can include, but does not strictly need to include, transition probabilities.

Figure 1
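The distinction drawn above between a rule-based finite state machine and a Markov chain can be sketched in a few lines of Python. This is a minimal illustration, not a reconstruction of anything in Minsky or Hayek: the state names, input symbols, and transition probabilities are all hypothetical, chosen only to make the two update rules visible side by side.

```python
import random

# Deterministic finite state machine: (state, input) -> next state.
# State and input names are hypothetical, chosen only for illustration.
TRANSITIONS = {
    ("idle", "start"): "running",
    ("running", "pause"): "idle",
    ("running", "hit"): "stunned",
    ("stunned", "recover"): "idle",
}


def step(state, symbol):
    """Return the next state; inputs with no rule leave the state unchanged."""
    return TRANSITIONS.get((state, symbol), state)


def run(state, symbols):
    """Feed a sequence of inputs to the machine and return the final state."""
    for symbol in symbols:
        state = step(state, symbol)
    return state


# Markov chain over the same states: each row gives the probability of
# moving to each listed state (or staying put). Each row sums to 1.
CHAIN = {
    "idle": {"idle": 0.5, "running": 0.5},
    "running": {"running": 0.6, "idle": 0.2, "stunned": 0.2},
    "stunned": {"stunned": 0.3, "idle": 0.7},
}


def markov_step(state, rng):
    """Sample the next state from the current state's transition row."""
    targets = list(CHAIN[state])
    weights = [CHAIN[state][t] for t in targets]
    return rng.choices(targets, weights=weights, k=1)[0]


# "jump" has no rule in the stunned state, so it is ignored.
final_state = run("idle", ["start", "hit", "jump", "recover"])

rng = random.Random(0)
path = ["idle"]
for _ in range(5):
    path.append(markov_step(path[-1], rng))

print(final_state)
print(path)
```

In the first machine the environment's input selects the transition; in the second, the current state alone fixes a probability distribution over successors. A rule table may mix the two by attaching probabilities to particular inputs.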

4.22 Hayek describes finite state machines where agent states are updated based upon context that includes other agents. Hayek begins the discussion with emphasis on communication. This is the theory of communication that he references in his March 1953 letter to Taylor. Communication includes consequences of action in general.

3 Although most of our discussion will have relevance to most types of mental phenomena, we shall here concentrate on communication and particularly description, because these raise in the clearest form the problems involved when we talk about the mental phenomena, and because it is for this case comparatively easy to state a test by which we can decide whether a description has been communicated by one system to another. Provisionally we shall define this by saying that a description has been communicated by one system $S_1$ to another system $S_2$, if $S_1$, which is subject to certain actions by the environment which do not directly affect $S_2$, can in turn so act on $S_2$ that the latter will as a result behave, not as $S_1$ would behave under the influence of those causes, but as $S_2$ itself, in its peculiar individual position, would behave if these causes which have acted on $S_1$ had acted directly on $S_2$.

4.23 To say that $S_2$ "will as a result behave . . . as $S_2$ itself, in its peculiar individual position, would behave" indicates that the state of $S_2$ has been influenced by the action of $S_1$ (for related discussion, see Hayek 1955, 214-215). Using an example to clarify, Hayek elaborates with reference to a particular circumstance at the end of the manuscript:

. . . [T]here is no generically new difficulty if certain parts of the environment should, e.g., require a different mode of propagation, offer the prospect of faster or slower advance, or indicate the necessity of some preparatory action of a different kind (e.g., removing an obstacle) if progress in the direction aimed at is to be achieved. This will include what we commonly call the "use" of objects of the environment as "means" for reaching the goal, be it merely the choice of a surface on which it is easier to move or the employment of a "tool" (e.g., using a log as a bridge). All that is required is a sufficient richness of the apparatus of sensory classification as to be able to classify the effects of all the alternative combinations of actions and external circumstances which can arise from the initial situation.

Among the objects of the environment which will influence the action of $S_1$ will be $S_2$. Among the "knowledge" of $S_1$, i.e., among the alternative courses of events represented in it, there will be included the representation that "if $S_2$ joins in the chase, the prey will change its direction so as to become easier to catch", that "if $S_2$ sees the prey, it will join in the chase", and "if I ($S_1$) show symbols representative of the kind of prey, the place and direction of its movement, $S_2$ will act as if it had seen the prey."

Finally, every part of the information which $S_1$ has to transmit $S_2$ in order to cause $S_2$ in the fashion which $S_1$ expects to be helpful in catching the prey, refers to classes which the two systems must be able to form in order to perform the actions (other than communication) which we have assumed capable of performing. All that we have to assume to explain the transmission of information is that the representatives of the classes which are formed in evaluating the environment can also be evoked by symbols by another.

4.24 Any node that interacts with other nodes and changes states based on those interactions can be described as a finite state machine. Experienced game developers regularly employ the abstraction of the finite state machine to define the capabilities of the human player, a non-player computer, objects in the virtual environment (for example a door or portal that is locked [$S_1$] or open [$S_2$]), and so on. The example of a video game, then, is useful for clarification, as these are basically simulations that allow for human inputs. When you select the Start option for the newest Super Mario, the game transitions to a different state where player entries into the controller implement different effects on objects in the game, i.e., the player can make Mario run, jump, dive, kick, and so forth. For another example, if player health falls to a significantly low level or a button is pressed for a significant period of time without interruption from the environment, some games endow the player's character with special powers. And often, a player who takes damage from another player or expresses a special power will be unable to access the full array of responses that are typically available from the resting state. We see that, in the case of interaction with another player or non-player computer, some state transitions require another party. Or perhaps, consistent with Hayek's example of a log, a player learns the skill of balancing that would be required to use the log as a bridge. Now the player can cross a gap in the environment, moving on to new scenarios that may activate further new states. Of course, everything described here applies to agent-based computational models (Vriend 2002).
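Hayek's hunting example can be loosely sketched as two interacting state machines. The sketch below is an assumption-laden illustration rather than Hayek's own formalism: the `Agent` class, the event names, and the rule table are hypothetical. What it tries to capture is the test quoted above, namely that a symbol from $S_1$ leads $S_2$ to behave as $S_2$ itself would have behaved had the cause acted on it directly.

```python
class Agent:
    """A node whose state changes only through the events it receives."""

    # Hypothetical rule table: (current state, event) -> next state.
    # Seeing the prey and receiving a symbol for it lead to the same state.
    RULES = {
        ("resting", "sees_prey"): "chasing",
        ("resting", "receives_signal"): "chasing",
        ("chasing", "prey_caught"): "resting",
    }

    def __init__(self, name):
        self.name = name
        self.state = "resting"

    def receive(self, event):
        """Apply an event; events with no matching rule change nothing."""
        self.state = self.RULES.get((self.state, event), self.state)
        return self.state


def communicate(sender, receiver):
    """The sender shows its symbol only while it is itself chasing."""
    if sender.state == "chasing":
        receiver.receive("receives_signal")


s1, s2 = Agent("S1"), Agent("S2")

communicate(s1, s2)        # S1 has seen nothing yet, so S2 is unaffected
before = s2.state

s1.receive("sees_prey")    # the environment acts on S1 only
communicate(s1, s2)        # S1's symbol now acts on S2
after = s2.state

print(before, after)
```

The transition of `s2` requires another party: nothing in its own environment changed, yet its state did.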

4.25 Unlike a video game, a good theory is a highly abstract state machine that exhibits external validity in the domain of interest. Hayek's abstract presentation of his theory of communication is consistent with framing interactions in terms of finite state machines. Hayek's thought is distinguished from emerging work in computation in that it highlighted the limits of understanding. Hayek sought to convey the interdependence that exists within and between systems, but he thought as an abstract theorist. The insights on which Hayek drew, however, originated in the field of computation. These early insights were informed by Weaver, Bertalanffy, and Woodger on organized complexity (Caldwell 2014, 15-16; Lewis 2014).

4.26 Despite Hayek's thinking in a similar direction 15 years before Minsky's publication of Computation, the theoretical contribution that could complement discussion of artificial intelligence, cognition, and communication remained a \$20 bill on the sidewalk that, in light of the narrative developed so far, others were discouraged from picking up.

5. Machine Learning or Artificial Intelligence?

5.1 Reasonably, Minsky did not want studies in machine intelligence to be limited to neural nets. Hayek's contribution was also part of this domain. In the same article in which Minsky (1961b) critiqued Hayek's views on decidability, shutting him out of the discussion on neural networks, Minsky also begins his quest to subvert the neural network program altogether. In another footnote, Minsky indicates:

Work on 'nets' is concerned with how far one can get with a small initial endowment; the work on 'artificial intelligence' is concerned with using all we know to build the most powerful systems that we can. It is my expectation that, in problem-solving power, the (allegedly brain-like) minimal-structure systems will never threaten to compete with their more deliberately designed contemporaries. (Minsky 1961, 26, n. 36)

5.2 Minsky softens the blow, somewhat, noting "nevertheless, their study should prove profitable in the development of component elements and subsystems to be used in the construction of the more systematically conceived machines." This early date is at odds with what Mikel Olazaran refers to as the "official history" of the development of artificial intelligence, which goes that "in the mid 1960s Minsky and Papert showed that progress in neural nets was not possible, and that his approach had to be abandoned." Jeremy Bernstein's 1981 interview of Minsky for the New Yorker follows precisely this view:

In the middle nineteen-sixties, Papert and Minsky set out to kill the Perceptron, or at least, to establish its limitations - a task that Minsky felt was a sort of social service they could perform for the artificial-intelligence community. For four years, they worked on their ideas, and in 1969 they published their book "Perceptrons".

. . .

"There had been several thousand papers published on Perceptrons up to 1969, but our book put a stop to those," Minsky told me.

5.3 Certainly after the publication of Perceptrons, Minsky was clear in his unwillingness to support any developments in neural networks. After meeting with Minsky seeking support for a neural network project as a graduate student in the early 1970s, Paul Werbos recalls rejection by Minsky, noting that he "was clearly very worried about his reputation and his credibility in his community (Anderson and Rosenfeld 1998, 344)." Several years after the New Yorker interview, Minsky and Papert would deny that their work was directly responsible for the fading popularity of neural networks among researchers. In retrospect, Papert would claim that:

Yes, there was some hostility in the energy behind the research reported in Perceptrons, and there is some degree of annoyance at the way the new movement has developed; part of our drive came, as we quite plainly acknowledged in our book, from the fact that funding and research energy were being dissipated on what still appear to me (since the story of new, powerful network mechanisms is seriously exaggerated) to be misleading attempts to use connectionist methods in practical applications. But most of the motivation for Perceptrons came from more fundamental concerns, many of which cut cleanly across the division between networkers and programmers. (Papert 1988, 4-5)

. . .

Our surprise at finding ourselves working in geometry was a pleasant one. It reinforced our sense that we were opening a new field, not closing an old one. But although the shift from judging perceptrons abstractly to judging the tasks they perform might seem like plain common sense, it took us a long time to make it. So long, in fact, that we are now only mildly surprised to observe the resistance today's connectionists show to recognizing the nature of our work. (7)

5.4 And together with Minsky in the preface to the 1988 republication of Perceptrons, Papert reflects:

Why have so few discoveries about network machines been made since the work of Rosenblatt? It has sometimes been suggested that the "pessimism" of our book was responsible for the fact that connectionism was in a relative eclipse until recent research broke through the limitations that we had purported to establish. Indeed, the book has been described as having been intended to demonstrate that perceptrons (and all other network machines) are too limited to deserve further attention. Certainly many of the best researchers turned away from network machines for quite some time, but present-day connectionists who regard that as regrettable have failed to understand the place at which they stand in history. As we said earlier, it seems to us that the effect of Perceptrons was not simply to interrupt a healthy line of research. That redirection of concern was no arbitrary diversion; it was a necessary interlude. To make further progress, connectionists would have to take time off and develop adequate ideas about the representation of knowledge.

5.5 Funds for research were drying up and "connectionists would have to take time off" to develop new ideas that would strengthen their research program.

5.6 Their story is missing important details. Consistent with the citation noted at the beginning of this section, Jack Cowan recollects that Minsky and Papert began their campaign against perceptrons in the early 1960s. His comments about the perception of neural networks growing amongst researchers in the early 1960s are consistent with Minsky's critique that neural nets focused only on "simple heuristic processes" (Minsky 1961, 26, n. 36):

What came across was the fact that you had to put some structure into the perceptron to get it to do anything, but there weren't a lot of things it could do. The reason was that it didn't have hidden units. It was clear that without hidden units, nothing important could be done, and they claimed that the problem of programming the hidden units was not solvable. They discouraged a lot of research and that was wrong. Everywhere there were people working on perceptrons, but they weren't working hard on them. Then along came Minsky and Papert's preprints that they sent out long before they published their book. There were preprints circulating in which they demolished Rosenblatt's claims for the early perceptrons. In those days, things really did damp down. There's no question that after '62 there was a quiet period in the field. (Anderson and Rosenfeld 1998, 108)

5.7 Minsky and Papert had clarified the limits of a single-layered perceptron; however, these limitations had been recognized by Rosenblatt (1961) himself. In the book that represented the revival of research in neural networks, Rumelhart and McClelland reflect on the role of Minsky and Papert in narrating developments for the rest of the field:

Minsky and Papert's analysis of the limitations of the one-layer perceptron, coupled with some of the early successes of the symbolic processing approach in artificial intelligence, was enough to suggest to a large number of workers in the field that there was no future in perceptron-like computational devices for artificial intelligence and cognitive psychology. The problem is that although Minsky and Papert were perfectly correct in their analysis, the results apply only to these simple one-layer perceptrons and not to the larger class of perceptron-like models. (Rumelhart and McClelland 1987, 112).
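The kind of limitation at issue can be made concrete with a small sketch. It is a simplification under stated assumptions: a single threshold unit trained directly on two binary inputs with the textbook perceptron learning rule, which is not the specific class of machines analyzed in Perceptrons. The function name `train_perceptron` and its parameters are hypothetical. The unit learns AND, which is linearly separable, but can never finish an error-free pass over exclusive-OR, which is not.

```python
def train_perceptron(samples, epochs=100, lr=1.0):
    """Train one threshold unit with the perceptron learning rule.

    Returns (weights, bias, converged), where converged is True only if
    some full pass over the samples produced no classification errors.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            output = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - output
            if delta != 0:
                # Move the decision boundary toward the misclassified point.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:
            return w, b, True
    return w, b, False


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

_, _, and_converged = train_perceptron(AND)
_, _, xor_converged = train_perceptron(XOR)

print("AND learned:", and_converged)
print("XOR learned:", xor_converged)
```

Raising `epochs` does not help with XOR, since no single line separates its two classes. A unit placed between input and output (a hidden unit) removes the obstacle, which is why the question of how to train such units mattered so much to the later history.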

5.8 The critique of Rumelhart and McClelland is consistent with the closing commentary of Perceptrons where Minsky and Papert express skepticism about the capabilities of multilayered neural networks:

The problem of extension is not merely technical. It is also strategic. The perceptron has shown itself worthy of study despite (and even because of!) its severe limitations. It has many features to attract attention: its linearity; its intriguing learning theorem; its clear paradigmatic simplicity as a kind of parallel computation. There is no reason to suppose that any of these virtues carry over to the many-layered version. Nevertheless, we consider it to be an important research problem to elucidate (or reject) our intuitive judgment that the extension is sterile. Perhaps some powerful convergence theorem will be discovered, or some profound reason for the failure to produce an interesting "learning theorem" for the multilayered machine will be found. (Minsky and Papert 1969, 231-232)

5.9 Given Minsky's centrality in the field of artificial intelligence, readers in the field would have understood this commentary as a signal that pursuit of other programs would be more rewarding and, perhaps, more likely to be rewarded. John Holland observed in retrospect that "John McCarthy and Marvin Minsky are both very articulate and they both strongly believed in their [symbolic] approach (Husband et al., 2008, 389)." This is how he explains that:

Notions like adaptation simply got shoved off to one side so any conversation I had along those lines was sort of bypassed. My work and, for instance, Oliver Selfridge's work on Pandemonium, although often cited, no longer had much to do with the ongoing structure of the area. . . . This was the time when symbolic logic had spread from philosophy to many other fields and there was great interest in it. But even so, it's still not absolutely clear to me why the other approaches fell away. Perhaps there was no forceful advocate (Husband et al., 2008, 389, 390).

5.10 Mikel Olazaran affirms this interpretation of developments:[5]

It is important to note that Minsky and Papert's work had its effect upon the controversy well before the book was published. . . . [T]hey conjectured that progress in multilayer nets would not be possible because of the problem of learning. . . . The key issue (which I try to elucidate below) is that their study was widely seen as a 'knock down' proof of the impossibility of perceptrons (and of neural nets in general) (Olazaran 1996, 628, 629).

5.11 Long before Minsky and Papert published their Perceptrons, funding for perceptron research had slowed in favor of symbolic AI. In the words of Jon Guice:

Minsky began mounting his attack in meetings at that time, and presented critical papers in 1960 and 1961. In 1962, Minsky and his colleagues landed an ARPA contract for research, which continued each year after that for over a decade. [emphasis author's] Rosenblatt was not considered for funding by ARPA, and his support from the ONR and NSF, which had never been very large compared to ARPA grants, started petering out around 1964. Minsky and Papert refined and developed arguments against perceptrons throughout the decade. (Guice 1996, 126)

5.12 Notably, ARPA funding is explicitly recognized at the end of chapter 13 in Perceptrons (1969, 246). While funding was apparently drying up, Minsky's research group consistently received favor.

Through the early 1970s, government funding for neural-net research groups in the USA did not grow. Minsky's group continued to get nearly 'a million dollars a year' from ARPA, as part of Project MAC. The published literature displayed a slow decline in references to 'perceptrons', and a rise in those to 'artificial intelligence'. (Guice 1996, 126)

5.13 Keep in mind that, adjusted for inflation, \$1 million in 1970 would be worth more than \$8 million today.

5.14 Given this tension in the transition and in the interpretation of the history of the field, we can view the neural network revolution of the 1980s as dissent from the history as told by Marvin Minsky. Recognition of Minsky's exercise of leadership and its influence on neural networks allows us to contextualize the citations of Hayek by Rosenblatt and Minsky's response. Minsky's critique of Hayek coincides precisely with the start of his campaign against perceptrons. This also gives special meaning to the citation of F. A. Hayek (1952) by Cowan and Sharpe (1988a, 1988b). In 1986, the now canonical Parallel Distributed Processing: Explorations in the Microstructure of Cognition was published by David Rumelhart, James McClelland, and the PDP Research Group. The years following 1986 saw a swift increase in the momentum of research developing and applying neural networks.

5.15 Jack Cowan had been interested in machine learning and had also become familiar with cybernetics research as an undergraduate in the early 1950s. Before pursuing a Ph.D. at MIT in 1958, he spent three years working at Ferranti Labs, having been hired by J. B. Smith in 1955 to work on "instrument and fire control" (Husband, et al., 2008, 431-446). During that time "Ferranti arranged for me [Cowan] to spend a year at Imperial [College] doing a postgraduate diploma in electrical engineering." When he began his Ph.D. at MIT, he "joined the Communications Biophysics group run by Walter Rosenblith" and after about 18 months "moved to the McCulloch, Pitts and Lettvin group." That is, Cowan was at the heart of development in neural networks. It was during this time that Cowan attended the conference published as International Tracts in Computer Science and Technology and Their Application: Principles of Self-organization, where he presented a paper on neural networks: "Many-valued Logics and Reliable Automata". Hayek, along with Stafford Beer, John Bowman, Warren McCulloch, and Anatol Rapoport, "served as chairmen to the various sessions" (x, 135-179; see also Hancock 2024). Cowan recalls that "[t]here's no question that after '62 there was a quiet period in the field", which he attributes to "Minsky and Papert's preprints" (Anderson and Rosenfeld 1998, 108). Even Cowan reduced his own efforts regarding multilayer perceptrons:

When I looked at [Widrow's] work carefully, I could see that it was essentially using the same methods that Gabor had introduced in the mid-fifties, the gradient-descent algorithm. I did not put it together with my own sigmoid work, even though I had alrady[sic] been getting preprints from Minsky and Papert about their Perceptron studies. I knew from what we'd done with McCulloch that the exclusive-OR [XOR] was the key to neural computing in many ways. But unfortunately, at the back of my mind I had the idea if I could work out the dynamics of analog neurons, later on I could start looking at learning and memory problems. I just put it aside.

5.16 In 1988, Cowan and Sharp would publish "Neural Nets and Artificial Intelligence" for the Daedalus special issue "Artificial Intelligence". In the article they cite F. A. Hayek's Sensory Order in referencing the Boltzmann machine, which "provides a solution to the credit assignment problem for hidden units". The "Boltzmann machine can form a representation that eventually reproduces relations between classes of events in its environment (Cowan and Sharpe 1988, 102)." Hayek, here, is used as theoretical support for classification of the sort that interested Minsky. Ironically, Minsky saw this as a line of inquiry separate from neural networks. In a forward-looking comment from the same section, Cowan and Sharpe note that the Boltzmann machine "provides a way in which distributed representations of abstract symbols can be formed and therefore permits the investigation by means of adaptive neural nets of symbolic reasoning." The modern reader is likely aware that, since the neural network revolution of the 1980s, the domain of neural nets has expanded into the realm of symbolic reasoning. Indicative of this trend, Jurafsky and Martin include an entire chapter on neural networks in their leading textbook, Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition with Language Models.

6. Coda: Echoes of Hayek and Silence

6.1 I have reviewed Hayek's presence in early discussions of neural networks. He had certainly not intended to become part of the discussion on topics in artificial intelligence. Hayek viewed his contribution as being specifically to the understanding of the relationship between human physiology and cognitive classification, on the one hand, and a metaphor for complex systems in general, on the other (Caldwell 2004b). As the content of The Sensory Order had been of interest to Hayek long before the development of artificially intelligent systems along the lines presented by Turing and von Neumann, it is appropriate to consider any contribution by Hayek to the field as serendipitous. Still, whether or not it was his intention, he did play a role in discussion and developments. While it is not obvious that he played a role in Minsky's fight against neural learning and in favor of symbolic AI, this is precisely the lesson that is documented here.

6.2 This sheds new light on Robert Axtell's evaluation of Hayek's unrecognized innovations:

Reading Hayek on this subject [cognition and neural networks] from perspective of modern cognitive science, once again, seemingly in the position of Hayek have anticipated certain modern developments. But without either significant mathematical development or the luxury of the later literature to advance his ideas, his work, although prescient, seems to have gone unnoticed by the later innovators within psychology and cognitive science. For example, there is no reference to Hayek in the important early cognitive science text of Newell and Simon (1972). I think Hayek's contribution in this area is less substantive than methodological Given that this book was written in the heyday of behaviorism in psychology (Skinner, 1953), its focus on brain and mind as bottom-up, distributed processes was usefully out-of-step with psychology researchers of his day, closer to the working being done by mathematicians, engineers, cyberneticists, and so on who were working with what today we might call biologically grounded models of cognition (Samsonovich, 2012). It would take a generation for the 'parallel, distributed processing' (PDP) perspective to take off (McClellan & Rumelhart, 1986; Rumelhart and McClelland, 1986) and even longer for a research program close to Hayek's vision to become popular (e.g., Cacioppo, Visser, & Pickett, 2006; Dennett, 1995), although once again, I am not aware that any of these later writers cite Hayek.

Today there are researchers who know what Hayek wrote on this topic and even some pursuing topics in cognition from a perspective commensurate with his. For example, "The Hayek Machine" is a task learning system that lives in a simulated "block world" and which solves problems using very little information about its environment (Baum, 1999). More recently, large-scale computational efforts to model whole brains "from the bottom up" (Markram, 2006, 2012) are very much in the spirit of Hayek's ideas in this large, broad area, but his actual influence appears to be small. (Axtell 2014, 90)

6.3 Hayek was not simply forgotten or accidentally unrecognized. The extent of his recognition was curtailed because of its significance in the battle against the perceptron program. It is worth noting that Axtell's advisor, Herbert Simon, who was one of AI's "big four 'founders'", recognizes only a limited contribution from Hayek in his Sciences of the Artificial, noting that:

No one has characterized market mechanisms better than Friedrich von Hayek who, in the decades after World War II, was their leading interpreter and defender. His defense did not rest primarily upon the supposed optimum attained by them but rather upon the limits of the inner environment - the computational limits of human beings. (Simon 1996, 34)

6.4 Simon does not go into Hayek's works on complex systems.

6.5 Recognition of Hayek is not widespread amongst AI researchers. Citations of Hayek by Cowan and Sharpe, by Miller and Drexler, and in "High-tech Hayekians" seem to have amounted to little in the way of generating recognition of Hayek's contribution to neural networks. These citations are notably absent from Axtell's discussion of Hayek. And although Baum (1999) cites Miller and Drexler as the inspiration for the "Hayek Machine", there is little recognition of Baum's work amongst economists. He is not cited in Vriend's inquiry into whether or not Hayek was an ACE (Vriend 2002). Neither is he cited in the reflections on "Friedrich Hayek and the Market Algorithm" published in the Journal of Economic Perspectives (Bowles, et al., 2017). Even I had somehow missed Baum's relevance to my own work (Caton 2017; 2020). And again, in the new literature on economic calculation and artificial intelligence, Hayek's contribution to neural networks remains unrecognized (Boettke and Candela 2023; Bickley et al., 2022; Gmeiner and Harper 2024; Acemoglu 2023). Divisions exist across the academy. Without knowledge of various specialized fields, one is simply unable to recognize topical connections in the literature. If dramas like the one told here unfold in interdisciplinary domains that are inscrutable to experts in any one field, they will likely remain hidden beneath the technicalities of academic discussion.

6.6 We may also evaluate Mirowski's critique of Hayek in a new light. Mirowski argues that, by adopting the methods of the cyberneticists, Hayek subjected his work to the determinism common to that tradition. Models following the work of McCulloch and Pitts (1943) turn the human being into a finite-state machine whose future is predetermined given proper knowledge of the machine's present state and of inputs from the environment. This determinism leads Mirowski and Nik-Khah to assert that it would be along the lines of Hayekian thought to treat the marketplace "as one vast Turing Machine, with agents simply plug-compatible peripherals of rather diminishing capacities (119)." However, this is not quite accurate. If the marketplace is conceived of as a machine, it is a machine whose states are determined by the activity and states of its agents. While the authors are correct to note that "[t]he history of this research program reveals that certain aspects of the neoclassical model were shown to be Turing non-computable (119)" (see Axtell 2005), it would be more modest to simply refer to the economy as a state machine. In theory, it could be a Turing machine (i.e., lacking memory constraints), but any simulation of the economy would treat it as a finite-state machine. The state of this state machine is determined by the states of a multitude of state machines (agents) with diverse preferences, strategies, knowledge, and so forth. Even supposing that we did model the economy as a Turing machine in which the will of agents is wholly deterministic, the outcomes of individual actions and their aggregation parallel the halting problem in the sense that an entrepreneur cannot know ex ante whether or not his or her plan will succeed (Knight 1920; Foss and Klein 2012). Ignorance, not determinism, is the key feature underlying the discussion. Hayek's concerns about local knowledge, and about the degrees of explanation that high-level abstractions do and do not enable, do not strictly depend upon a determinism that denies free will. In fact, computer science recognizes that some problems - those referred to as NP-complete - have no known efficient deterministic solution; solving them efficiently would require a non-deterministic Turing machine that explores all possible paths to a solution at once.
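The claim that the state of the economy is determined by the states of its agents can be made concrete with a minimal sketch. The Python below is an illustration under stated assumptions, not a model taken from Hayek, Mirowski, or the literature cited here: the two-state agent, its `threshold` parameter, the `signal` rule, and all function names are hypothetical.

```python
import random
from dataclasses import dataclass

# Hypothetical two-state agent; the states and rules are illustrative only.
STATES = ("hold", "trade")


@dataclass
class Agent:
    state: str
    threshold: float  # hypothetical stand-in for the agent's local knowledge

    def step(self, signal: float) -> None:
        # Deterministic transition: the next state depends on the current
        # state and on the input, as in any finite-state machine.
        if self.state == "hold" and signal > self.threshold:
            self.state = "trade"
        elif self.state == "trade" and signal < self.threshold / 2:
            self.state = "hold"


def economy_state(agents):
    # The aggregate machine's state is simply the tuple of agent states.
    return tuple(agent.state for agent in agents)


def run(seed: int, n_agents: int = 5, n_steps: int = 10):
    # A seeded generator makes every run reproducible, yet the path is only
    # known by actually running the model.
    rng = random.Random(seed)
    agents = [Agent("hold", rng.random()) for _ in range(n_agents)]
    path = []
    for _ in range(n_steps):
        # Assumed signal: half last period's share of traders, half noise.
        share = sum(a.state == "trade" for a in agents) / n_agents
        signal = 0.5 * share + 0.5 * rng.random()
        for agent in agents:
            agent.step(signal)
        path.append(economy_state(agents))
    return path
```

Calling `run(seed)` twice with the same seed reproduces the same path, while different seeds generally trace different paths through the same finite state space. The rules are fully deterministic; what an observer lacks is advance knowledge of the path.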

6.7 Mirowski also claims that "however much Hayek wanted to portray knowledge as distributed across some structure, he maintained an implacable hostility to statistical reasoning throughout his career, a stance that effectively blocked any further comprehension of perceptrons and connectionist themes." This is a rather simplistic view of Hayek. It suggests that Hayek was disconnected from developments in neural network research as a result of 1) his own preference against technical modeling and 2) a lack of sophistication. Yet, in the article that announced his own transformation, Hayek expresses admiration for technical sophistication (Caldwell 2004a, 205-209):

My criticism of the recent tendencies to make economic theory more and more formal is not that they have gone too far, but that they have not yet been carried far enough to complete the isolation of this branch of logic and to restore to its rightful place the investigation of causal processes, using formal economic theory as a tool in the same way as mathematics. (Hayek 1937, 35)

6.8 As I have claimed with regard to Hayek's modeling in "Within Systems and about Systems", modeling agents and systems as nested finite-state models would be perfectly consistent with this view. Unless one can show with utmost certainty that, in the real world, the choice of model governing one's action is itself governed deterministically, meaning that the actor has no true choice in the matter, one need not be worried by the strict logic of computation in which agents are cyborgs. That is just the nature of computational modeling and predictive modeling in general, not a stricture on the existence of free will. One bothered by this fact might take refuge in the recognition that, although a computational model is deterministic, variation in random number generation allows each run to generate a unique path, so that repeated runs exhibit a broad range of variation. For NP-complete problems, randomized search of this kind is one of the principal practical means of approximating a solution. Rather than depend upon an ontological assertion concerning free will, Hayek grounds his respect for the autonomy of local agents in the recognition that learning, and the communication of that learning across a complex system, is facilitated exactly by a respect for the individual in society. Hayek's absence from the field was due neither to "hostility" toward technical modeling nor to a lack of sophistication. His participation was actively blocked by the grandfather of artificial intelligence, Marvin Minsky.

6.9 Still, we must recognize Hayek's lack of active participation in these discussions and his general lack of awareness of developments in artificial intelligence and computation. When asked about John von Neumann's cellular automata during his interview with Weimer, Hayek responds:

I wasn't aware of his work, which stemmed from his involvement with the first computers. . . . I met John Von Neumann at a party, and to my amazement and delight, he immediately understood what I was doing and said that he was working on the same problem from the same angle. At the time his research on automata came out, it was too abstract for me to relate it to psychology, so I really couldn't profit from it, but I did see that we had been thinking on very similar lines. (Weimer and Palermo 1982, 322)

6.10 It is unclear whether Hayek is referring to von Neumann's "Probabilistic Logics and the Synthesis of Reliable Organisms from Unreliable Components" or to the paper from the Hixon Symposium, "The General and Logical Theory of Automata". The precise serial logic of computing machines was likely unfamiliar to Hayek in either case. It is unfortunate that Hayek shows a lack of familiarity with von Neumann's book The Computer and the Brain, which contains significant elaboration on the functioning of the human brain and nervous system and is written in terms that surely would have been more familiar to him (Neumann 1958). Hayek's lack of awareness of this latter text seems consistent with a general lack of awareness of developments in computing.

6.11 In the decade that elapsed between Hayek's publication of The Sensory Order and Minsky's expression of judgment against Hayek's approach, Hayek's interests had moved on from neurology and focused on complex orders at a higher level of abstraction. His work on "Within Systems and about Systems" and the subsequent publication of "Degrees of Explanation" suggest that this change occurred almost immediately (Hayek 1955). This, together with the lack of general interest in The Sensory Order by the time he published "Degrees of Explanation", means that Hayek's own interests, and the incentives guiding those interests, were simply not in line with the new field of artificial intelligence that followed the 1956 Dartmouth Conference. The publications that followed across the next decade or so include "Kinds of Rationalism", "Notes on the Evolution of Systems of Rules of Conduct", "The Theory of Complex Phenomena", and "Rules, Perception, and Intelligibility", each of which appears in Studies in Philosophy, Politics, and Economics. While they bear general similarities to computational theory with regard to complexity and hierarchical organization, these works were not in conversation with work developed by researchers in neural networks or symbolic AI. Hayek's lack of active participation in the field where researchers showed interest in his work played an important role in the success of Minsky's critique of Hayek.

*

I am indebted to Bruce Caldwell for conversations and advice throughout the development of this project, without which this paper would not have been possible. My pursuit of this project is a consequence of his suggestion to investigate the conference on self-organization in which Hayek participated in 1961. I also owe appreciation to Bill Tulloh, who was happy to share his experience with the "High-tech Hayekians" decades ago.

1. Tulloh would go on to found Agoric Systems with Mark Miller: https://papers.agoric.com/team/. In a personal exchange, Tulloh indicated to me that not only was Phil Salin responsible for introducing Hayek to Miller and Drexler, which is noted in "High-tech Hayekians", but Salin was also familiar with the Law and Economics literature that had been developing during the 1970s and 80s (Letter from Phil Salin to Bill Tulloh).

2. The reader should note that this supposes that we are excluding internal changes that occur in the process of such a description being generated and communicated by the machine.

3. This correspondence can be found at the Hoover Institution's Friedrich A. von Hayek Papers, Box 52, Folder 33, Taylor, James G. 1953-1954: https://oac.cdlib.org/findaid/ark:/13030/kt3v19n8zw/

4. In a footnote from his "Philosophical Consequences" chapter, Taylor does note that Hayek admits to being unable to identify the mechanism that coordinates the collective responses of neurons and groups of neurons to external events:

I am happy to report that in a recent conversation with me Professor Hayek wholeheartedly accepted the proposition that a mechanism for determining the following of any impulse is required. (1962, 355)

Taylor writes that the conversation was "recent". If this refers to a date close to the publication of Taylor's book, then this would indicate that it was part of a different discussion, which appears not to have been part of Hayek's responses in this exchange. Without further evidence we cannot be sure.

5. Y. Liu's essay, "The Perceptron Controversy", is an especially informative synthesis of the sources, one that I found helpful.

Figure 1
